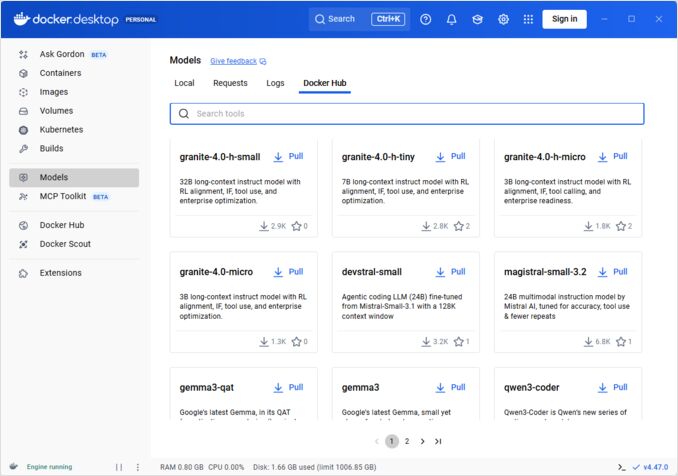

Compare Docker's new Model Runner with Ollama for local LLM deployment.

A detailed analysis of performance, ease of use, GPU support, and API compatibility, plus guidance on when to choose each solution for your AI workflow in 2025:

https://www.glukhov.org/post/2025/10/docker-model-runner-vs-ollama-comparison/

#llm #devops #selfhosting #ai #docker #ollama