LLM Development Ecosystem: Backends, Frontends & RAG

A comprehensive guide, in the form of a series of articles, on building production-ready LLM applications.

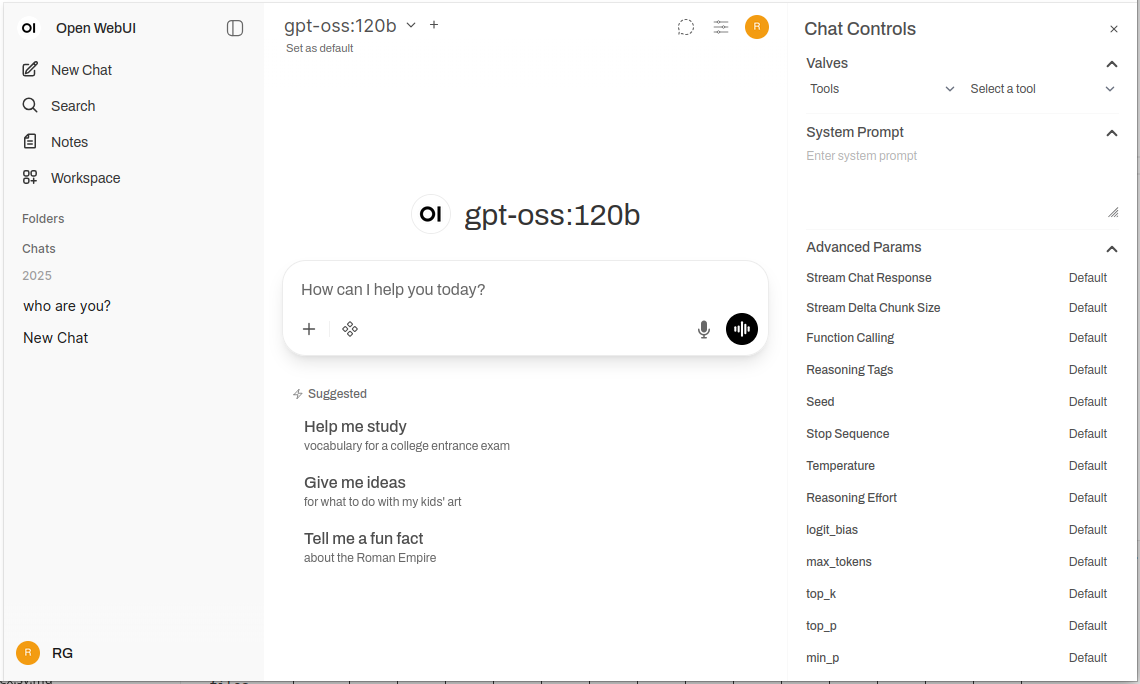

Exploring LLM hosting solutions (Ollama, Docker Model Runner, cloud providers), client implementations in Python and Go, RAG architectures, vector stores and embeddings, MCP servers, and practical deployment strategies:

https://glukhov.au/posts/2026/llm-development-ecosystem/

#llm #ai #python #go #golang #ollama #rag #mcp #docker #selfhosting #devops #coding #dev